When Color Constancy Goes Wrong: Correcting Improperly White-Balanced Images

Mahmoud Afifi¹, Brian Price², Scott Cohen², and Michael S. Brown¹

¹York University, ²Adobe Research

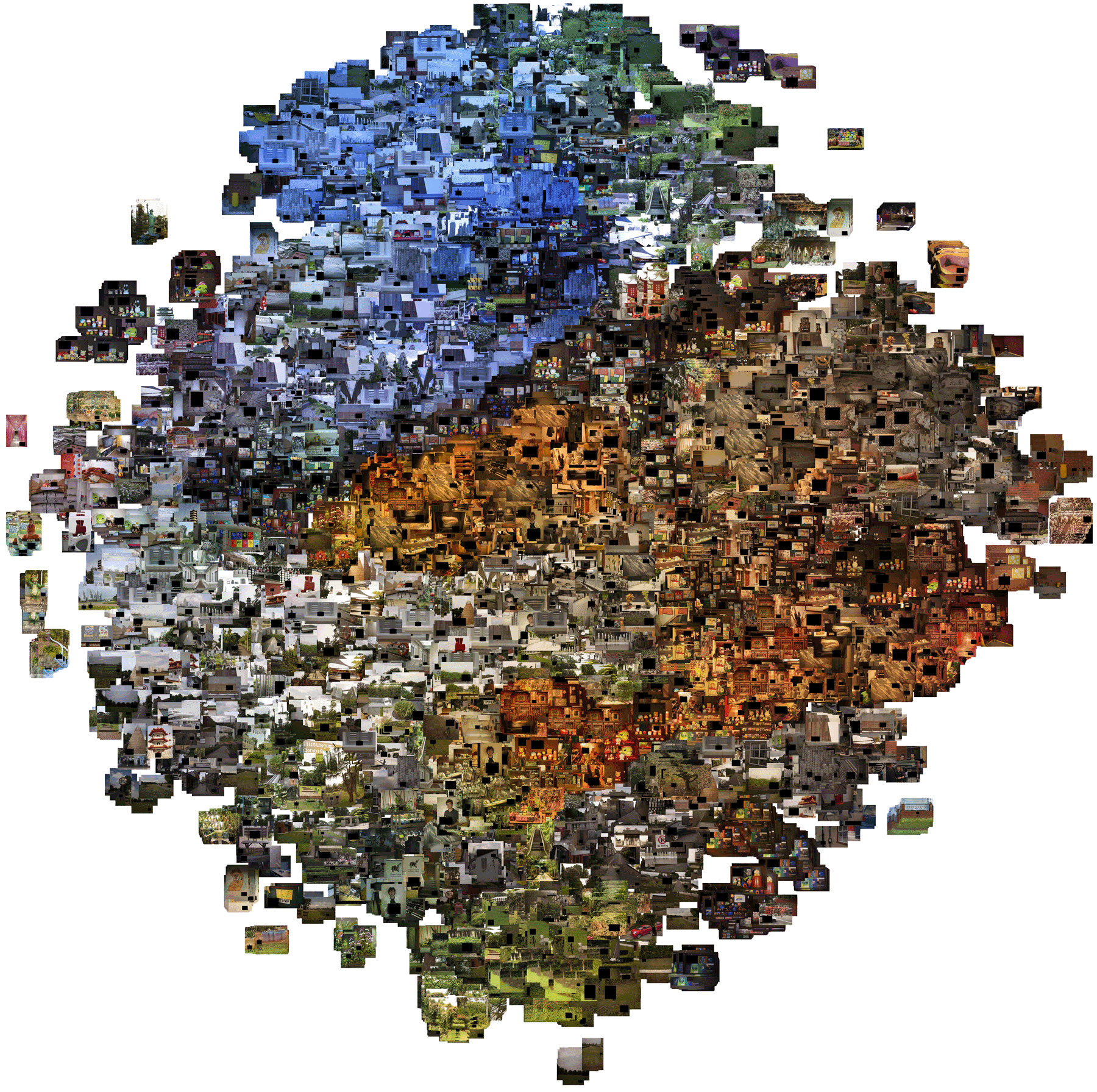

We have generated a dataset of 65,416 sRGB images rendered using different white-balance presets in the camera (e.g., Fluorescent, Incandescent, Daylight) with different camera picture styles (e.g., Vivid, Standard, Neutral, Landscape). For each sRGB rendered image, we provide a target white-balanced image. To produce the correct target image, we manually select the "ground-truth" white from the middle gray patches in the color rendition chart and then apply a camera-independent rendering style (namely, Adobe Standard). The dataset is divided into two sets: the intrinsic set (Set 1) and the extrinsic set (Set 2).
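As a rough illustration of what selecting a "ground-truth" white from a gray patch implies, the sketch below applies a standard diagonal (per-channel gain) correction so that the measured gray becomes neutral. It is a minimal sketch and not the rendering pipeline used to build the dataset: the function name `white_balance_from_gray`, the normalization by the green channel, and the assumption of linear values in [0, 1] are all illustrative choices.

```python
import numpy as np

def white_balance_from_gray(linear_rgb, gray_patch_rgb):
    """Scale each channel so that the reference gray patch becomes neutral.

    linear_rgb: float array of shape (H, W, 3) with linear values in [0, 1].
    gray_patch_rgb: mean RGB measured on a gray patch, length 3.
    """
    gray = np.asarray(gray_patch_rgb, dtype=np.float64)
    if np.any(gray <= 0):
        raise ValueError("gray patch values must be positive")
    # Per-channel gains, normalized so the green channel is left unchanged.
    gains = gray[1] / gray
    balanced = np.asarray(linear_rgb, dtype=np.float64) * gains
    return np.clip(balanced, 0.0, 1.0)

# Toy example: an image with a uniform color cast equal to the gray patch color.
image = np.full((2, 2, 3), [0.2, 0.4, 0.3])
balanced = white_balance_from_gray(image, gray_patch_rgb=[0.2, 0.4, 0.3])
```

In the toy example every pixel ends up with equal red, green, and blue values, which is what a correctly white-balanced gray surface should look like before any rendering style is applied.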

In addition to our main dataset, the Rendered WB dataset, we rendered the Cube+ dataset in the same manner. Here, we also provide the correct target images. You can find the results of this additional set in the Results tab. Additionally, we rendered the MIT-Adobe FiveK dataset with different WB settings. We could not render the correct target images for it, as this dataset has no calibration object placed in each scene. We provide this set for purposes other than evaluating post-capture white-balance correction methods. In total, our Rendered WB dataset and the additional rendered sets contain over 100,000 images.

Please cite our paper if you use any of the following image sets.

The Rendered WB dataset (Set 1)

Set 1 contains 62,535 rendered images and the corresponding ground-truth images. The images were captured by seven DSLR cameras.

Please check the get_metadata function to easily get the ground-truth filename of any input image. For training and testing, you should mask out the color chart from the images.

For each rendered image, we provide a metadata file that describes the color chart coordinates and the colors of each patch. You can check our source code to mask out the color chart pixels from here. We also provide another version of Set 1 in which the color chart is already masked out of all images. However, you still need to check our code to get the number of masked-out pixels for a fair evaluation.

In our paper, we evaluate our model on this dataset using three-fold cross-validation. Here, we give the exact filenames used for our cross-validation.
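To see why the number of masked-out pixels matters for a fair evaluation, consider a mean squared error that is normalized only by the pixels outside the color chart. The following is a minimal sketch under stated assumptions, not the evaluation code referred to above: the function name `masked_mse` and its parameters are hypothetical, and it assumes the masked-out pixels have identical values (for example, zero) in both the result and the ground-truth image.

```python
import numpy as np

def masked_mse(result, target, num_masked_pixels):
    """Mean squared error over the pixels that were not masked out.

    Assumes the masked pixels are identical in both images, so they add
    nothing to the squared-error sum and must be excluded from the count.
    """
    result = np.asarray(result, dtype=np.float64)
    target = np.asarray(target, dtype=np.float64)
    if result.shape != target.shape:
        raise ValueError("result and target must have the same shape")
    height, width, channels = target.shape
    valid_values = (height * width - num_masked_pixels) * channels
    if valid_values <= 0:
        raise ValueError("no valid pixels left after masking")
    return float(np.sum((result - target) ** 2) / valid_values)

# Toy example: a 2x2 image in which one pixel belongs to the masked chart.
target = np.zeros((2, 2, 3))
result = np.full((2, 2, 3), 10.0)
result[0, 0] = 0.0  # masked-out pixel, identical in both images
error = masked_mse(result, target, num_masked_pixels=1)
```

A plain mean over all four pixels would report 75 here, while the masked version reports 100; the difference grows with the size of the chart, which is why images with different chart areas are not comparable unless the masked pixels are excluded from the count.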

Input images: Part1 | Part2 | Part3 | Part4 | Part5 | Part6 | Part7 | Part8 | Part9 | Part10

Input images [a single ZIP file]: Download (PNG lossless compression) | Download (JPEG) | Google Drive Mirror (JPEG)

Input images (without color chart pixels): Part1 | Part2 | Part3 | Part4 | Part5 | Part6 | Part7 | Part8 | Part9 | Part10

Input images (without color chart pixels) [a single ZIP file]: Download (PNG lossless compression) | Download (JPEG) | Google Drive Mirror (JPEG)

Augmented images (without color chart pixels): Download (rendered with additional/rare color temperatures)

Ground-truth images: Download

Ground-truth images (without color chart pixels): Download

Metadata files: Input images | Ground-truth images

Folds: Download
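The three-fold protocol mentioned for Set 1 can be sketched as follows: each fold is held out once for testing while the other two are used for training. The format of the downloadable fold files is not described here, so the sketch assumes the filenames have already been loaded into three lists; the helper name `three_fold_splits` and the example filenames are placeholders.

```python
def three_fold_splits(folds):
    """Yield (train, test) filename lists, holding out each fold once."""
    for held_out in range(len(folds)):
        test = list(folds[held_out])
        train = [
            name
            for index, fold in enumerate(folds)
            if index != held_out
            for name in fold
        ]
        yield train, test

# Placeholder filenames; the real lists would come from the provided folds.
folds = [["a.jpg", "b.jpg"], ["c.jpg"], ["d.jpg", "e.jpg"]]
splits = list(three_fold_splits(folds))
```

Using the provided fold lists rather than a random split keeps results comparable with those reported for the same folds.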

The Rendered WB dataset (Set 2)

Set 2 contains 2,881 rendered images and the corresponding ground-truth images provided for testing on different camera models.

This set includes images taken by four different mobile phones and one DSLR camera. We cropped the color chart from each image.

Input images: Download

Ground-truth images: Download

The rendered version of the Cube+ dataset

This additional set contains 10,242 rendered images and the corresponding ground-truth images. The images were originally rendered from the Cube+ dataset.

Please check the get_metadata function to easily get the ground-truth filename of any input image. We masked out the calibration object from all images. However, you should account for the number of removed calibration-object pixels in the evaluation process.

Please check our evaluation code from here for a fair comparison.

Input images: Download

Ground-truth images: Download

The rendered version of the MIT-Adobe FiveK dataset

This set contains 29,980 rendered images. We could not render ground-truth images, as there is no calibration object in the original raw-RGB images.

We provide this set for purposes other than evaluating post-capture white-balance correction.

Input images: Download
